Assessing NASA advisory activities: What makes advice effective

by Joseph K. Alexander


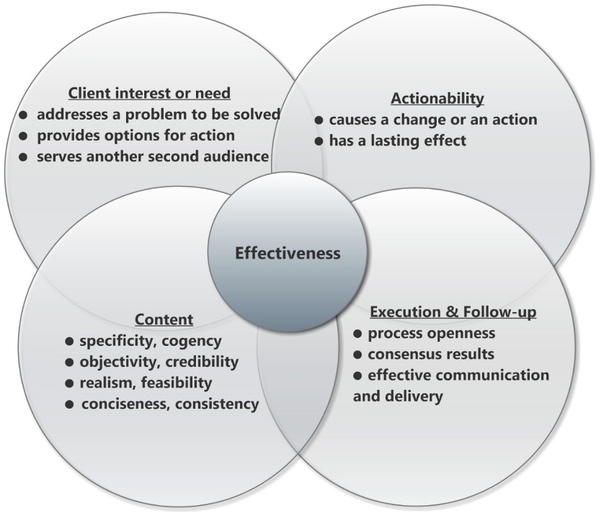

Key factors for effective advice

Client interest or need

The first key to effectiveness is whether the advisory effort has an accepted purpose and an intended recipient or client who needs and wants advice. Is there a problem that needs to be solved or a decision that needs to be made? Does an agency need to define a way forward, or is there a question that calls for independent expertise? If the answer to any of these questions is “yes,” then outside advice may be appropriate. But need may not be sufficient. There also must be a receptive audience that is willing to accept the advice or a third party that can influence a response.


The Academies decadal surveys are especially good examples of advice to a waiting and receptive audience. Decadal surveys are developed with broad input from a large segment of the relevant scientific community, and all encompass the following key attributes: (1) a survey of the status of the scientific field at the time of the study, (2) recommended scientific goals for the next decade, (3) a prioritized set of recommended programs to achieve the science goals, and (4) in more recent years, a decision-making process to follow if budget limitations or technical problems obstruct the recommended program. Once astronomers demonstrated the strengths of the decadal survey approach in the 1960s through the 1990s, NASA and congressional officials welcomed decadals for all areas of space science. The surveys satisfied a clear desire for consensus priorities that had the backing of the broad research community.

Four other notable examples of advisory studies that were requested by interested government recipients are the 1976 Space Telescope Science Institute report, the Earth System Science Committee of the 1980s, the 1992 Discovery program committees, and the 1999 Mars Program Independent Assessment Team.

Space Telescope Science Institute report: When NASA was trying to build a case for starting development of the Large Space Telescope (later named the Hubble Space Telescope) in the early 1970s, planners focused both on the flight hardware designs and on the post-launch scientific aspects of the program. Two competing concepts for ground operations emerged: (a) Goddard Space Flight Center’s preference to co-locate scientific operations with the engineering control center for the spacecraft and telescope at NASA or (b) outside astronomers’ preference for an independent scientific institute that would be managed by an organization such as a consortium of universities.

By the mid-1970s, Noel Hinners, who was then Associate Administrator for Space Science, already had his hands full dealing with challenges posed by the program’s budget and a political fight to gain congressional approval for the program. He didn’t need another battle with the scientific community at that time. Consequently, Hinners arranged for the Academies Space Science Board to organize a study to examine possible institutional arrangements for the scientific use of the telescope.[3]

The committee’s report—“Institutional Arrangements for the Space Telescope”—offered a core recommendation that a Space Telescope Science Institute should “be operated by a broad-based consortium of universities and non-profit institutions…The consortium would operate the institute under a contract with NASA.”[4] The report recommended operational functions, structure, governance, staffing, facilities, arrangements for interactions with NASA, and location of the institute. NASA largely accepted the committee’s recommendations, and they were adopted when the Space Telescope Science Institute was established in 1981. The institute has been enormously successful, not only in operating Hubble but also now in operating the James Webb Space Telescope.

The report nicely illustrates an advisory effort that met NASA’s needs and provided actionable advice that had a significant lasting impact. Noel Hinners wanted a way to resolve the conflict between Goddard and the astronomy community, he wanted independent guidance on how to maximize the long-term scientific value of the Space Telescope program, and he wanted to be able to build a positive relationship with the community that would shore up their willingness to be advocates for the program. Hinners probably also wanted cover; he had a good idea of what he wanted to do, but having a National Academy of Sciences committee behind him made his future decisions much more palatable.

Earth System Science Committee: In the early 1980s, NASA officials sought to build a rationale for a space-based Earth observing system. NASA’s Director of Earth Science Shelby Tilford arranged to form an Earth System Science Committee under the auspices of the NASA Advisory Council, to be chaired by National Center for Atmospheric Research Director Francis Bretherton. Working over a period of more than five years, the committee articulated a compelling scientific rationale for studying the Earth as a complex, interactive system, and it provided a scientific basis for NASA’s Earth Observing System program, which began in 1991.

Eric Barron, an oceanographer who joined the Penn State faculty in 1986 and who subsequently has served in many scientific and academic leadership positions, recalled his impressions about the importance of the report:

“The Bretherton Committee’s Report … spurred all sorts of different … including changing how advice was given to the federal government, particularly in the National Academy of Sciences. What you saw emerging was a Climate Research Committee… the Board on Atmospheric Sciences and Climate, the Committee on Global Change Research … you had those three groups, and then some others, that all began to interact within the same arena … I view those committees as the ones that stepped in following the Bretherton Report specifically to look and evaluate many different programs.”[5]

Discovery Program advisory committees: When former Jet Propulsion Laboratory (JPL) cosmochemist Wes Huntress became Director of Solar System Exploration at NASA in 1992, one of his goals was to start a program of low-cost planetary missions that could be built and launched more frequently than in the past and that could be worked into the budget in hard times as well as good times. His idea was initially controversial because he proposed a program of missions that would be managed by individual principal investigators who would be selected through open competition. The conventional wisdom, encouraged by JPL, was that planetary missions were too big for NASA to give full development responsibility to a single lead scientist outside NASA and too costly to be feasible.

Huntress formed two ad-hoc advisory committees—one for science and one for engineering—to evaluate the low-cost mission concept in depth and to specify what attributes would be needed to make it work. Huntress recalled how he got the results that he hoped for:

“And I just let them go at it and have the NRL [Naval Research Laboratory] folks and the APL [Johns Hopkins University Applied Physics Laboratory] folks kind of teach everybody how to do low-cost spacecraft and people experienced in planetary teach the low-cost spacecraft guys what the idiosyncrasies of doing planetary are. And so we spent a couple of years convincing our science community ‘Yeah, maybe this will work.’ The scientists liked the idea of the missions being PI-led and proposed, and the engineering community became convinced that you could do low-cost planetary missions.”[6]

NASA transformed the committees’ deliberations into the Discovery program, and it proved to be a great scientific and strategic success and a critical element of NASA’s solar system program. Fourteen Discovery missions have been launched since 1996, with only one failure.

Mars Program Independent Assessment: In September 1999, Mars Climate Orbiter, which had been developed under NASA Administrator Daniel Goldin’s faster-better-cheaper approach, failed to go into orbit at Mars. Then Mars Polar Lander failed to land safely in December 1999. Understandably alarmed by the failures and determined to fix the Mars program as quickly as possible, Goldin and his Associate Administrator for Space Science, Ed Weiler, recruited space program veteran Tom Young to lead an ad-hoc Mars Program Independent Assessment Team.


The team’s report in early 2000 found that “There were significant flaws in the formulation and execution of the Mars program”[7] that included lack of discipline and of defined policies and procedures, failure to understand prudent risk, unwillingness at JPL to push back when headquarters-imposed cost or schedule constraints were dangerously tight, and an overall focus on cost instead of mission success. The report identified best practices from successful missions and areas for future attention, including project management responsibility and accountability; testing, risk management, and decision making; budgeting; and institutional relationships.

Weiler used Young’s report to restructure the Mars program, starting with the appointment of Ames Research Center executive Scott Hubbard as Headquarters Mars Program Director and the clarification of lines of responsibility and authority between Headquarters and JPL. With the benefit of the Independent Assessment Team’s advice, Weiler, Hubbard, and JPL were able to get the program back on track, and NASA went on to enjoy nine Mars mission successes, starting with Mars Odyssey, launched in 2001, and continuing through the Mars Perseverance Rover, launched in 2020.

Tom Young summed up his views on the importance of having a willing and capable audience as follows:

“It’s one thing to have a good report and secondly to have someone to deliver it to who knows what to do with it…There were people who not only knew what to do with it, but were genuinely interested in getting the report and wanting to respond to the recommendations…A good report was developed, but it would have just been put on a shelf if there had not been competent, capable people to receive the report and integrate the recommendations that we had, and that happened. And I think that happened in a manner that kind of set the stage for the Mars Program moving from what was clearly a low…to what since then has been an extraordinary series of successes.”[8]

All the examples illustrate the point that advice has a better chance of being used when the recipient wants it and asks for it. Maybe that should be obvious, but it’s still important.

Sometimes the advisee doesn’t seek or want advice, but a third party does and insists that the process go forward. Advice delivered at the request of a third party often turns out to be important. A notable example is the Academies assessment of options to extend the life of the Hubble Space Telescope.

Extending the life of the Hubble Space Telescope: Following the disastrous loss of the Space Shuttle Columbia in February 2003, NASA Administrator Sean O’Keefe canceled a planned Hubble Space Telescope (HST) servicing mission, saying that for safety reasons there would be no more shuttle flights to HST. That did not sit well with Senator Barbara Mikulski of Maryland, who had an interest because two key HST institutions—the Space Telescope Science Institute and the NASA Goddard Space Flight Center—were in her state and because she chaired the Senate appropriations subcommittee that handled NASA’s budget. In March 2004, Senator Mikulski directed NASA to engage the Academies for an independent evaluation of options for extending the life of HST.

The Space Studies Board (SSB) and the Aeronautics and Space Engineering Board jointly organized a study committee and recruited physicist Louis Lanzerotti to serve as chair. The committee met for the first time in early June and prepared an interim report, at NASA’s request, in mid-July, saying that HST was worth saving, that there were significant uncertainties about the feasibility of robotic servicing, and that NASA should take no actions that would preclude a shuttle servicing mission until the committee completed its assessment.

The committee’s final report was delivered, briefed to NASA and congressional officials, and released to the public in December. The report concluded that NASA should send the Space Shuttle to service HST and recommended against relying on robotic servicing.[9]

The report was well received on Capitol Hill and in the scientific community. When Congress passed the NASA Authorization Act of 2005,[10] it included language calling for a shuttle mission to HST so long as it would not compromise astronaut safety. In October 2006, NASA Administrator Michael Griffin reversed O’Keefe’s decision and announced that NASA would fly one more shuttle servicing mission to HST.

The final HST servicing mission in May 2009 turned out to be a roaring success. Hubble has since outlived even the most optimistic post-repair projections of its lifetime, and it still performs well today.

So, the central questions are “Will the effort satisfy a need?” and “Will it respond to an appeal for outside help?” Successful advice begins by recognizing where there is a need, a problem, or a question and then making the advice relevant to that need. Fundamentally, successful advice addresses an itch that needs to be scratched.

Actionability of the advice

Of course, effective advice must have more than a receptive recipient or patron. It must be actionable. One can look at this critical aspect in at least three ways. First, does the advice help lead to a change or action? Second, is the advice timely and available in time to be used effectively? Advisory reports that take longer to prepare than the time scale on which budgets, personalities, or institutional interests change are not likely to be very useful. Third, does the advice have an appropriately lasting effect? Fleeting ideas don’t make much of an impact.


Our poster children for successful reports—the decadal surveys—are distinguished by the fact that government officials have generally worked hard to follow the recommendations and priorities. Issues of cost, technological complexity, agency budget realities, programmatic factors, and politics often impact an agency’s ability or willingness to implement the recommendations, but the decadals still present a standard by which to measure the utility of outside advice.

The Earth system science, Discovery program, Space Telescope institute, Mars program assessment, and Hubble servicing efforts all led to actions that persisted as long as the need existed. Perhaps the most challenging aspect of the Hubble servicing study was that the committee had to complete its work in a short time. The committee rose to that challenge and delivered its advice in less than eight months, which is near-record time for an Academies study.

Content and preparation of the advice

Advice can go to a willing audience and address important issues and still fall flat. How the advice is framed and how it is communicated become very important factors in whether the advice is heard and considered. The most successful cases of advisory activities share most of the following attributes.

Specificity: Cogency and specificity are particularly important if advice is going to be useful and effective. Is the advice substantive? Does it include a clear path for action? Is it convincing and compelling? Is it explicit and free of code words or concepts that befog what advisors really intend?

The report on a Space Telescope science institute, the Discovery program study teams, and the Hubble Space Telescope servicing study all had very explicit and well-argued recommendations on which NASA could act. Decadal surveys are, by their nature, distinguished by their recommendations for explicit priorities. Experience has shown that the more explicit the advice, the more likely it is to get the attention of decision makers in the Office of Management and Budget (OMB) as well as in Congress. Subtle statements, by contrast, rarely work.

Objectivity and credibility: Advice also needs to be viewed as objective, fair, and credible, and these attributes become increasingly important when the advice addresses uncertain, complex, or controversial topics. Advisors’ clout depends upon how people outside the advisory process view the process. Are the advisors really experts? Are they community leaders? Do they have acceptance in the community as being consensus builders?

There can be a fine line between building consensus around the majority views of a community on the one hand and the destructive consequences of arbitrarily freezing out contrary points of view on the other. That’s why an advisory group’s objectivity, fairness, breadth, balance, independence, and stature are important to gaining acceptance once advice is delivered.

The members of the Hubble servicing study were notable for their stature and expertise. The committee was led by a chair whose reputation for evenhandedness and demanding standards was exceptional, and the committee itself brought extraordinary depth of expertise in all the areas that were relevant to the topic. Perhaps the most important and lasting impact of the Earth System Science Committee was its ability to bring a diverse research community together and to forge a consensus in which Earth scientists thought about their fields in a new, integrated way. And of course, the success of the decadals is very much a consequence of the survey committees’ reliance on community leaders who undertake a broad outreach effort to build consensus and community ownership of the final product.

Former NASA planetary science director Wes Huntress attributed much of the success of the two teams that developed the Discovery program of small planetary science missions to the fact that the members were able to bring the full range of points of view about the concept’s feasibility to the debate:

“[D]evelopment of the Discovery program required a great deal of outside advice from not just the science community but from the engineering community as well on how to craft a program that was not in the experience base, or even the desire, of these communities. An advisory group of engineers with members from outside organizations like APL and NRL with experience in low-cost missions had to be brought into the process to counter JPL’s flagship-mission proclivities. The science advisory group wrestled with their culture of vying for space on large missions. The crafting of the Discovery program had to deal with a myriad of counter-culture scientific and engineering issues.”[11]

Independent outside advice can also play another kind of role. Government science officials often must make decisions that are likely to be controversial, even though the necessary course is clear. But with or without controversy, there’s a great benefit to be gained from being able to share ownership of a decision with the scientific community. Consequently, a key value of advice can be to independently confirm and support a direction that officials expect to take.

Former NASA science Associate Administrator Ed Weiler put the situation succinctly when he said, “I like having air cover. If I were a general I wouldn’t attack without air cover.”[12] There is a rich legacy of SSB reports in which the board assessed changes that NASA was considering to reduce or simplify the scope of planned space missions, endorsed the proposed changes, and thereby gave them a blessing on behalf of the broad scientific community. So reinforcement is important, if it is substantiated.

Realism and feasibility: Among the first questions that government officials ask upon receiving advice, even in the most welcoming circumstances, are, “Do the recommendations define what actions are needed; what will it cost to act on the recommendations, and can we afford it; and are the recommended actions within our power and capabilities?” Thus, effective advice must pass the tests of affordability, achievability, and actionability.


The Discovery program teams mentioned above were notable for the fact that they addressed ways to solve a problem by reducing costs. The Discovery advisory group experience provides an interesting contrast with NASA’s Solar System Exploration Committee (SSEC), which tackled affordability issues a decade earlier.[13] The SSEC temporarily arrested the nearly disastrous freefall of the planetary science program by recommending two new mission classes: Planetary Observers and Mariner Mark II. However, neither scheme proved to be feasible or affordable in practice, and both went away shortly after initial attempts to pursue them. Discovery, on the other hand, proved to be a continuing success because of its affordability and associated scientific and management strengths.

A classic case of scientific advisory committees losing touch with reality relates to recommendations to reorganize the government. After considering feasible solutions, or perhaps failing to focus on feasible solutions first, many committees lunge for the idea of recommending a reorganization and reassignment of responsibilities within or across government agencies as their preferred solution. Such recommendations rarely, if ever, succeed. For example, the 1985 Space Applications Board report,[14] which flagged an unacceptably incoherent and uncoordinated federal Earth remote sensing program and rigid and divisive relationships between NOAA and NASA, recommended moving NOAA out of the Department of Commerce. NOAA remains part of the Department of Commerce today. Advisors who are technical experts usually understand the complexities of technical issues, but perhaps they have trouble appreciating that obstacles posed by established bureaucracies can be even more formidable and beyond the advisors’ reach.

Conciseness: Deciding the appropriate length of an advisory report and the amount of detail needed to back up advisors’ recommendations can be complicated, but the bottom line is almost always, “Keep it crisp, concise, and to the point.” Senior agency officials at NASA and OMB and congressional staffers have generally argued that the most useful reports are short, focused, and prompt. Such pieces of advice include only as much data and elaboration as is needed to make the case, and no more. Senior officials don’t have time to read long documents, and furthermore, they often don’t have time to wait for an answer, or so the story goes.

Let’s look at a few examples of conciseness before getting to the exceptions. The report on Institutional Arrangements for the Space Telescope was loaded with quite specific points about the rationale, roles, and structure of an institute, but the authors covered it all in just 30 pages plus a few appendices. Tom Young’s Mars Program Independent Assessment Team distilled their findings down to a 13-page narrative summary and a set of 65 incisive briefing charts.

The letter reports that were prepared by the SSB and its standing committees before 2007 were usually only a few pages long and seldom ran over 20 pages. They rarely required discussion of data or analytical efforts to support their conclusions; instead, they often pointed to prior work by the same advisory bodies to underpin the conclusions.

Beginning in 2017, the SSB received Academies approval to issue what are called “short reports.” These successors to earlier letter reports are prepared by the SSB’s standing discipline committees; they address relatively narrowly focused tasks that are within a committee’s areas of expertise; and they present consensus findings but no formal recommendations. For example, the SSB’s Committee on Planetary Protection completed three reports between 2020 and 2022, each of which was between 50 and 70 pages long. To date the SSB’s committees have produced 14 such short reports covering the full range of space science disciplines.

So, when might brevity not be a virtue? When a subject is particularly complex or far-reaching, there are clear reasons for the advice to be accompanied by more detail than can be shoehorned into a brief report. Sometimes detail is necessary to provide an evidentiary basis or in-depth analysis or simply to outline the background for conclusions in adequate detail. There are also occasions when an advisory report is written for multiple audiences, and in those cases the level of appropriate detail may differ from one audience sector to another. This is usually the case for decadal surveys and other major scientific discussions where the report is intended to be read and appreciated both by students and members of the scientific community, who will want substantial scientific detail, and also by program officials and policy decision makers, who will want to get to the bottom-line advice. In these cases, the structure of the advisory report becomes especially important.

Two other examples are interesting. The Earth System Science Committee, under Francis Bretherton, took five years to complete its work. But the committee didn’t make the world wait for its final report. Instead, it first prepared a very succinct summary of the emerging Earth system science concept that was basically a brochure called an “Overview.”[15] It then followed with a document of about 30 pages, called “A Preview,” that was the equivalent of an extended executive summary. And finally, the full report appeared, called “A Closer View.”[16] The ESSC was working to bring along the relevant scientific communities, and so taking some time to develop and articulate the arguments in depth was probably a good strategy.

The Hubble servicing report, which Lanzerotti’s committee prepared in only about six months, is an example of a different sort. The committee had to analyze all the dimensions of the problem—value of Hubble, projected lifetime of Hubble, maturity and outlook for robotic servicing, outlook for Space Shuttle performance, and absolute and comparative risks—and give NASA and Congress a timely assessment. The committee’s report did so in a document of only about 110 pages plus appendices. The study was a heroic effort, both in scope and turnaround time, that went well beyond what the Academies can ordinarily accomplish.

Consistency: Given the many witty and sometimes wise aphorisms, some lauding consistency but more often belittling it, what are advisors to make of consistency? The National Academies make a big deal of consistency. While there may be no formal policy, there is an expectation that new advice rendered by an Academies advisory committee will be consistent (or at least not inconsistent) with prior Academies advice on the same subject. This shouldn’t be too hard in general; if a committee gets things right the first time, then a later, properly reasoned study should get the same answer. Academies committees often cite prior advice while justifying conclusions in a new study. This kind of consistency can substantially strengthen the credibility of the advice when an audience can see a historical chain of data and reasoning from which new conclusions are drawn. Certainly, the converse situation, advice that changes direction or appears to be unstable, will not instill confidence that today’s position won’t change again tomorrow. So as a rule, consistency in advice can be a virtue.


But the environment in which advice is developed and offered isn’t static. To the contrary, new scientific or technological developments can lead to compelling new scientific opportunities and possibly new priorities. Likewise, the political and programmatic environment can change and thereby change the boundary conditions that define what is practical and feasible and what is not. For example, a large investment in a project to pursue a high-priority scientific question may become so costly in a newly constrained budget environment that it is either no longer affordable at all or not affordable without doing significant damage to the rest of the scientific program.

This is basically the situation in which the organizers of decadal surveys found themselves in the 2010s, and the debate about how to marry consistency and pragmatism became serious. For instance, should future midterm assessments in the interval between decadal surveys avoid any tinkering with priorities recommended by the surveys and accept them as gospel? Should new decadal surveys accept priority missions and projects from the prior survey as gospel or should they all be fair game for revision?

Certain key aspects of the decadal surveys and their predecessors have been highly consistent from one version to the next. For example, the major scientific goals articulated in the SSB’s discipline-oriented science strategy reports that preceded the decadal surveys still form a reasonably consistent train of scientific priorities. Furthermore, all Academies science strategy reports going back to the beginning and all parallel advisory documents from NASA’s internal committees have emphasized a handful of critical issues concerning the health and robustness of the space sciences. These recurring themes have included the need for a balanced portfolio of spaceflight mission sizes; balance between investments in missions and supporting research and technology; vigorous flight rates that reduce gaps between missions; and development of the technical workforce. Thus, one can readily find threads of consistency in advisory history even while practical realities and new scientific discoveries have caused priorities and approaches to evolve and adapt over time.

Execution and follow-up

The fourth key factor influencing the effectiveness of advice, after audience interest and the utility and content of the advice, relates to the process itself. “How was the advice developed and delivered; was the process open and consultative; did it emerge from serious deliberation, and did it represent a clear consensus; and was it communicated appropriately?” These aspects of the execution of the advisory process all depend heavily on having an established process and a strong chair or other leader of the group of advisors.

Both NASA’s own committees and the Academies have processes that have been established over decades. The NASA process follows the requirements of the Federal Advisory Committee Act (FACA), which include providing for balanced advisory group composition, open meetings and deliberations, and an overall structure that is established or reaffirmed every few years by the NASA Administrator. The Academies process follows the dictates of FACA section 15, as interpreted by the Academies, including a rigorous committee member appointment process that follows FACA and institutional guidelines and a rigorous peer review process for its advisory reports. The Academies process differs from the one NASA uses for its internal committees, not only because it may be more rigorous but also because it is usually significantly more time-consuming and slower to deliver answers. The most successful advisory products also have had systematic approaches for gathering data, information, and outside points of view.

Strong chairs have been crucial to many important advisory activities, and indeed, most of the particularly successful advisory studies highlighted earlier benefited from having strong chairs at the helm. The best chairs have been able to command respect and to lead their colleagues to consensus via a process that has been accepted for its fairness, rigor, and realism. The best chairs also have used an array of tools, including writing op-ed columns and arranging private meetings with members of Congress, to get their message out about the scientific community’s views and advice. One particularly active past chair has referred (positively) to these tools as opportunities for “misbehaving,” but so long as the chair respects the integrity of the advisory institution and knows where to draw a line, a chair who isn’t afraid to push the envelope can have an extraordinary impact. The business of chairing an advisory activity can be time-consuming, especially in Academies studies, and so it requires genuine commitment. Former congressional staffer and SSB Director Marcia Smith emphasized the singular importance of a chair for an Academies study as follows:

“[T]he key to almost everything is the chair of the committee. And if you have a chair who is really widely respected to begin with, the committee members are going to defer to that person and that person is going to know how to get a decent consensus and, yet, still have strongly worded recommendations. So, I think the chair of the committee has a lot of influence on what actually comes out even through the review process.”[17]

Tom Young, who himself has earned an extraordinary reputation as a leader of important advisory studies, recalled the impact of one legendary SSB member in the 1970s, Caltech geophysicist Jerry Wasserburg, who chaired the Committee on Planetary and Lunar Exploration at the time. Young felt that the committee’s influence was largely a consequence of Wasserburg’s sustained engagement with NASA leadership, especially the administrator.[18]

Finally, our list of key success factors must include follow-up. An advisory group’s work is rarely completed just by tossing its advice over to a government official and declaring success. The advisory ecosystem is sufficiently complex and multifaceted that often there are multiple audiences—not only agency program managers and senior officials but also other executive branch staff members, members and staff from Congress, and the space community—that have a stake in the implications and implementation of the advice. Consequently, the most effective advisory groups ensure that there are provisions for communicating their advice widely.

Follow-up also can involve longer-term stewardship of the advice. For example, members of Bretherton’s Earth System Science Committee built momentum throughout the scientific community for the committee’s new way of looking at the Earth sciences. As has been typical of many independent advisory studies, Tom Young testified at a congressional hearing about the results of his team’s Mars program assessment, and the chair and several members of the Hubble Space Telescope servicing committee gave extensive congressional briefings about their findings. The chair of the 1991 decadal survey for astronomy and astrophysics, John Bahcall, took his job so seriously that he famously committed himself to watching over the survey’s recommendations for the full decade following its completion. In contrast, advice that has been shipped quietly to “current occupant” has rarely had any impact.

Advice vs. advocacy vs. special pleading

It is natural, and for that matter important, for someone on the receiving end of advice to ask whether the advice is objective and credible. Likewise, others who might want to assess the advice may well ask whether the advice represents the special interests of the advisors or the broader technical and programmatic context of the subject of the advice. In other words: “When does advice become advocacy? Is advocacy necessarily a bad thing? And when does advocacy become special pleading?”

First, almost all advisory studies have an element of advocacy. The space community is so small that nearly everyone in it has a stake in the outcome of the advice it provides. No one expects a decadal survey committee to say, “This scientific field isn’t worth it; don’t pursue it.” Instead, the surveys are organized on the premise that they address important scientific areas that are worthy of support. Thus, the members of a survey committee are, at a basic level, advocates for the field. That advocacy should be accepted as a given, provided it is viewed in the context of the breadth of the subject on which the committee is charged to advise.

Special pleading, on the other hand, can occur when its proponents take so narrow a position that objectivity is lost. One can argue that when advocacy becomes special pleading, it is no longer credible as advice.

Two characteristics help distinguish legitimate advocacy from special pleading. The first relates to the breadth of the topic of the advice (and of the advisors) and the diversity of possible advisory conclusions that could be presented. For example, all decadal surveys span a broad range of sub-disciplines and topics within their scientific field. Astronomers weigh the importance of studying extrasolar planets, stars, novae, dust, galaxies, and many other kinds of cosmic stuff, and they consider a great range of both ground-based and space-borne tools to conduct their studies. Solar system exploration survey committees consider competing arguments for research on rocky planets in the inner solar system, gas and ice giants in the outer solar system, and a host of primitive bodies and material such as comets, moons, asteroids, and interplanetary debris, and they also assess the merits of approaching the research from differing perspectives such as biology, geology, geophysics, atmospheric science, or plasma physics. The surveys for other fields have a similarly daunting range of perspectives and areas of concentration to consider.

In each case, the survey committees bring together a diverse group of experts who can collectively discuss and debate all the areas under the survey’s purview. Individual committee members might be major movers and shakers in just one aspect of the field, but as a group the participants create a very broad and deep assessment of the whole field. That assessment usually presents an eloquent advocacy document for the field, but the process also protects the results from becoming narrowly self-serving for just one point of view or idea for the future direction of the field. In other words, there are enough opportunities for conflicting opinions within the membership of the group to prevent special pleading from prevailing.

The second important distinction between objective advice and special pleading relates to making choices. When advisors weigh alternatives, assess competing positions, and rank their recommendations, it becomes very hard to argue persuasively for a single topic without regard to the larger context. The poster children of effective advice, the decadal surveys, meet this test by debating and recommending explicit priorities after weighing a large menu of competing choices.

Concluding thoughts

We began this discussion by noting four major areas that often determine whether advice is effective. We could have easily posed the criteria as four sets of questions:

- Is there an audience or client who needs the advice and understands that it is needed? Is there a likelihood that advice will be welcomed?

- Is the advice framed in a way that offers practical, affordable, realistic recommendations for action?

- Is the advice supported by clear, substantive arguments and/or data that provide a basis for the advisors’ conclusions? Are the arguments useful to stakeholders who need to understand the advice?

- Are the advisors prepared, and able, to deliver and communicate their conclusions to all relevant stakeholders?

The examples of advisory activities discussed above are notable because most of these questions can be answered affirmatively for them, and there have been many more like them over NASA’s history. Unfortunately, there have also been examples where the advisors failed some of these tests, and their reports have largely been relegated to bookshelf decorations.

Endnotes

1. All of the case studies are discussed in Science Advice to NASA: Conflict, Consensus, Partnership, Leadership.

2. Isaac Asimov, “The Evitable Conflict” (I, Robot, Bantam Books, New York, NY, mass market reissue, 2004) pp. 243-244.

3. For a good view of Hinners’ thinking about the institute, see Hinners’ interview by Rebecca Wright for the NASA Headquarters Oral History Project, Aug. 19, 2010, pp. 3-4.

4. National Research Council, Institutional Arrangements for the Space Telescope: Report of a Study at Woods Hole, Massachusetts, July 19-30, 1976 (The National Academies Press, Washington, DC, 1976) p. vii.

5. Barron interview, NASA “Earth System Science at 20” Oral History Project, July 1, 2010, p. 6.

6. Author interview with Wes Huntress, Nov. 4, 2013.

7. Mars Program Independent Assessment Team, Mars Program Independent Assessment Team Summary Report (NASA, Washington, DC, March 14, 2000) p. 12.

8. Project Management Institute, interview with Tom Young, published on YouTube, Nov. 7, 2013.

9. Space Studies Board and Aeronautics and Space Engineering Board, Assessment of Options for Extending the Lifetime of the Hubble Space Telescope: Final Report (National Research Council, The National Academies Press, Washington, DC, 2005).

10. NASA Authorization Act of 2005 (P.L. 109-155), enacted in December 2005.

11. Huntress email to the author, Nov. 1, 2013.

12. Author interview with Edward Weiler, Aug. 14, 2013.

13. See “The Survival Crisis of the U.S. Solar System Exploration Program” by John M. Logsdon in Exploring the Solar System: The History and Science of Planetary Exploration, edited by Roger D. Launius (Palgrave Macmillan, 2013) pp. 45-76, for a comprehensive discussion of the origins of the SSEC.

14. Space Applications Board, Remote Sensing of the Earth from Space: A Program in Crisis (National Research Council, National Academy Press, Washington, DC, 1985).

15. Earth System Science Committee, Earth System Science: Overview, a Program for Global Change (NASA Advisory Council, NASA, Washington, DC, May 1986).

16. Earth System Science Committee, Earth System Science: A Closer View (NASA Advisory Council, NASA, Washington, DC, January 1988).

17. Author interview with Marcia Smith, Sept. 3, 2013.

18. Author interview with Thomas Young, Feb. 20, 2014.

Note: we are using a new commenting system, which may require you to create a new account.