The unnatural selection of planetary missions

by Ralph Lorenz


But then they rarely do, which is the problem. Cooped up for a week in an anonymous hotel with two dozen other reviewers, they are kept well fed to sustain long hours of dissection, poring over hundreds of tightly written pages of proposals. It’s tough work, leaving little time for exercise or relaxation. “I’ve gained 10 pounds, I’m just sitting and reading all the time,” rues one anonymous reviewer. After they individually read dozens of proposals at their home institutions and identify strengths and weaknesses, they caucus in teleconferences that are often seven hours long (sometimes 13 hours) to reconcile different opinions, correct misunderstandings, and agree on the wording of their reviews. In a couple of plenary meetings at the aforementioned anonymous hotel, the reviewers present their assessments to NASA to help it decide on its next planetary exploration missions.

On February 18, 2015, NASA received 28 proposals to its Discovery program of low-cost (approximately $450 million) planetary missions, starting this months-long review process. Discovery missions are proposed by individual scientists to answer specific questions. Some broad areas of exploration are best addressed by large “Flagship” missions like Cassini and Curiosity, but these missions take a decade to fully take shape and expose the program to cost risk and delay. In the bad old days of the 1980s, only a few missions were in development, and they grew ever bigger and came farther and farther apart. NASA recognized the need for a faster turnaround of smaller missions to balance the program. In theory, these smaller missions would also accept a higher level of risk in order to do things more cheaply or efficiently.

An important feature of these missions is that they are “PI-led”: a single scientist, the principal investigator (PI), decides the goals of the mission and chooses the team to execute it. This entrepreneurial approach fosters innovation and provides a pathway for a broader community to devise and execute planetary missions more cost-effectively.


Even for Flagship missions, many of the instruments are competed in much the same way. When the call for instruments for NASA’s Mars 2020 rover went out a year ago, there were over 50 submissions—a symptom, perhaps, of the desperation of the planetary community. With selection rates for research grants falling, and such grants only lasting three years, involvement in a mission brings some (admittedly precarious) continuity to a scientist’s career, and universities see missions and instruments as prizes to be won, sustaining not just funding but also media attention for years to come.

There are only so many scientists skilled in the development of instruments or in the application of specific techniques. Like kids in a playground soccer game, these superstars get picked quickly as mission teams get put together. Some others get signed up to plug skill gaps, or to make a team more well-rounded in its age or gender balance, or sometimes—science is a human process, after all—just because they’re good friends with the “team captain”, the PI. And what about the not-so-cool kids left behind? Well, that’s NASA’s pool of reviewers.

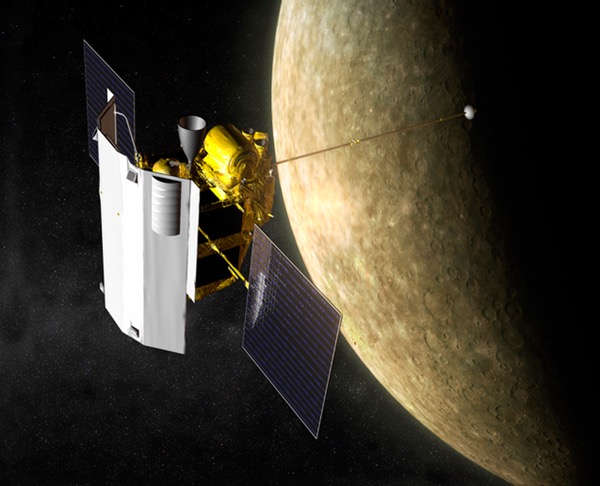

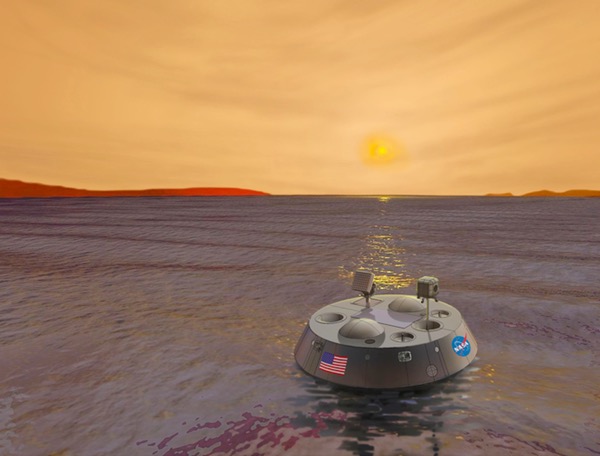

The Titan Mare Explorer (TiME) mission made it into the top three of the 28 proposals in the last Discovery solicitation in 2010. It underwent a more detailed study for 10 months but was not selected. Because it relied on a planetary alignment to transmit data direct to Earth from Titan’s northern polar seas during their summer, it cannot be reproposed. (credit: NASA)

The challenge of any scientific peer review, of course, is that the people best qualified to evaluate your proposals are also likely to be your competition. NASA Discovery Program Scientist Michael New has expressed frustration with the challenge of finding unconflicted reviewers: the last Discovery call, in 2010, needed 65 scientists and 85 engineers to assess the 28 proposals received, which involved an average of 20 scientists each! Finding 65 qualified scientists who are not at institutions hosting any of the hundreds of proposers is no easy task, especially when the review process makes such demands on its participants. When asked why they volunteered as a reviewer, one scientist answered, “The stakes were so high for the community. Which mission flies changes the course of what research is done for decades; someone has to do it or else the system breaks.” Ultimately, these unsung reviewers help improve the quality of the missions that eventually fly, even though they’ll never get any credit for it. Another motivation, of course, is that reviewing is a great way to learn how to write good proposals for future competitions.

In fact, the weeks-long review process is just the tip of the iceberg in terms of the effort spent on this selection process. The proposals to be reviewed are each hundreds of pages long, the result of months of frenzied design and formulation work by scientists and engineers, followed by rigorous costing and schedule planning. Details include not just what we want to study (Io volcanoes, say), with what instruments, and what spacecraft design will carry them, but exactly where parts will come from, when, how much they cost, how they’ll be tested, and so on. How much data will be produced, and which Deep Space Network antenna it’ll be received at, and who will analyze the data, and what experience they have? In the original Discovery program, proposals were manageably short, but, perhaps as a consequence of some kind of bureaucratic Second Law, the proposal requirements have become ever more rigorous. Assembling a credible proposal is now a million-dollar gamble.

Interestingly, our evolution shows itself in how the proposers work. Having evolved as hunter-gatherers, we have a highly developed sense of space, and this ability is exploited as the proposal comes together. There are usually hundreds of details that may appear in or affect different parts of the proposal (the power for an instrument, say), and as the design evolves, different sections get out of sync. This sort of inconsistency is just what reviewers love to catch, so how do you keep track of it all? Our memory for page and section numbers (which are always changing anyway) is limited, but by printing the proposal out and pinning it up on the wall, we start to develop a spatial map of the information: I don’t know if that Mars encounter date is in Section E.4 or Section F.3, but I know it was over in that corner of the room!

As I wrote this rumination on the process earlier this year, there were probably two or three dozen rooms in NASA centers, aerospace companies, and a few universities decked with what must have been among the world’s most expensive wallpaper. The proposals are subjected to grueling rounds of internal “Red Team” reviews to catch mistakes before they go out the door. Every diagram, every sentence, is argued over, abbreviated, deleted, reinstated.


Another ability rare in the animal kingdom is that of modeling the perceptions of other individuals. This mark of intelligence, shown by some apes and cuttlefish as well as by proposing scientists, comes into play in this process. Proposers ask themselves: what will the reviewers be looking for? Are there features of our anticipated competitors that we can slyly denigrate in our proposal? Is it worth spending limited page space on that, or on giving more details about our plans? Is it good enough that a key number is given only in a foldout or figure caption somewhere, or do we need to put it in the text as well to make sure they find it? It is like writing poetry and playing chess at the same time, by committee.

And for most of these teams, it will be all for naught. Later this year, using or ignoring the reviewers’ assessments, NASA Headquarters may pick three or four missions for more detailed “Phase A” study, after which another, even more grueling, review process takes place before one or maybe two missions actually make it to the launch pad, about seven years from now. Is all this competition wasteful? Perhaps, but to do anything else would be to exclude creative ideas or disenfranchise some organizations or scientists. Lacking an omniscient authority to choose between missions to planets (ironically named after gods!), the community willingly embraces competitive selection. It seems like the least-worst system.

It is a busy year, red in tooth and claw, for proposers and reviewers alike. But only out of such pressure do the best, most creative concepts for planetary exploration emerge for NASA to choose from later this year. May the best mission win!