Recalculating risk

by Jeff Foust


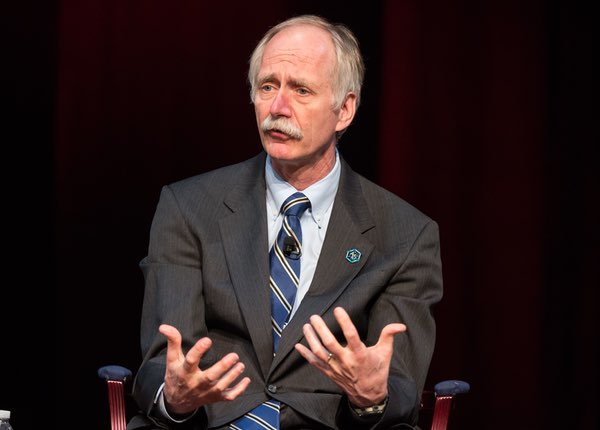


That issue of risk is coming back to the fore with new spacecraft and NASA’s plans for human missions beyond Earth orbit—eventually to Mars, via cislunar space, depending on what the new administration plans to do. Within the next two years, Boeing and SpaceX will fly commercial vehicles intended to ferry NASA astronauts to and from the ISS. NASA will also fly an uncrewed Orion on the first Space Launch System rocket, in preparation for a crewed flight into cislunar space as soon as 2021.

In a speech last week, a top NASA official indicated that the agency was rethinking how it evaluated the risk of flying those vehicles, in an attempt to learn the lessons from the past.

The topic of risk “is one that we spend a lot of time and energy on within NASA, but we probably don’t spend as much time with the public,” said Bill Gerstenmaier, NASA associate administrator for human exploration and operations, in a speech February 7 at the 20th Annual Commercial Space Transportation Conference in Washington.

Gerstenmaier said he wanted to talk about risk because of the pending introduction of new human spaceflight systems. “It will be an enormous achievement for NASA and for our industry partners, but it comes with a substantial element of risk, which must be acknowledged,” he said. “We never simply accept it, but NASA, our stakeholders, and the public must acknowledge the risk as we move forward and we field these new systems.”

In his speech, he said that NASA and industry should work to reduce risk in future human spaceflight systems, but that it was not practical to eliminate it. He cited a passage in the report by the Columbia Accident Investigation Board that examined the issue: “While risk can often be reduced or controlled, there comes a point where the removal of all risk is either impossible or so impractical that it completely undermines the nature of what NASA was created to do, and that is to pioneer the future.”

How, then, do you make the judgments of which specific risks to eliminate and which to accept in a spaceflight system? And, for that matter, how do you quantify those risks?

One metric that long has been used by NASA is known simply as “loss of crew”: the odds a vehicle will suffer an accident that results in the deaths of those on board. But, Gerstenmaier argued in his speech, it may be too simple.

“Risk cannot be boiled down to a simple statistic,” he said. “Some people also talk about these things as if they were simple. But, designing human space transportation systems, and the risk associated with operating them, is not simple.”

Gerstenmaier noted that, at the end of the shuttle program in 2011, the loss-of-crew statistic for the shuttle was 1-in-90. “Essentially, that means there was a high likelihood that we would lose a crew in 90 missions, statistically speaking,” he said.

He contrasted that calculation with the loss-of-crew estimates at the beginning of the shuttle program: models prior to the first shuttle missions offered estimates between 1-in-500 and 1-in-5,000. “Later, we were able to update the models with actual flight data,” he said. “It turned out we were really flying STS-1 with a 1-in-12 potential loss of crew.”
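As a rough illustration of what a per-flight figure implies over a whole program, the sketch below computes the chance of at least one loss of crew across a run of flights. It assumes every flight carries the same, independent risk, which is a simplification made here rather than anything stated in the speech (the shuttle's estimated risk clearly changed over the life of the program). The function name is hypothetical.

```python
def prob_at_least_one_loss(per_flight_odds: float, flights: int) -> float:
    """Chance of one or more losses in `flights` missions.

    Assumes each flight independently carries a 1-in-`per_flight_odds`
    risk. This is an illustrative simplification, not NASA's model.
    """
    p = 1.0 / per_flight_odds
    # Probability that every flight succeeds is (1 - p) ** flights;
    # the complement is the chance of at least one loss.
    return 1.0 - (1.0 - p) ** flights


# Figures quoted in the article: 1-in-12 (STS-1, revised),
# 1-in-90 (end of the shuttle program), 1-in-275 (commercial crew goal).
for odds in (12, 90, 275):
    chance = prob_at_least_one_loss(odds, 90)
    print(f"1-in-{odds} per flight, 90 flights: {chance:.1%}")
```

Under those assumptions, a constant 1-in-90 risk sustained for 90 flights works out to roughly a 63 percent chance of losing at least one crew, which is consistent with Gerstenmaier's description of a "high likelihood."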


He also suggested the loss-of-crew statistic could be gamed, in a sense, by taking steps to improve that metric without actually improving vehicle safety. For example, he said, adding redundancy to a failure mode can provide “a nice bump” in that loss-of-crew estimate. “However, by adding that backup system, you’re increasing the complexity,” he said. “You’re increasing the possibility that there’s some potential interaction among the subsystems that could lead to an unknown failure mode.”

Added to that are uncertainties in those loss-of-crew calculations: a vehicle with a 1-in-75 figure might be safer than one with a 1-in-100 if the latter had a larger statistical uncertainty. Those uncertainties, though, are often left out when discussing estimates of the loss of crew.
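One way to see how uncertainty can change the comparison is a small simulation. The sketch below is purely hypothetical: it treats each vehicle's per-flight loss probability as lognormally distributed around the quoted figure, with the spread set by an "error factor" (assumed here to be the ratio of the 95th percentile to the median). The distribution, the error factors of 1.5 and 10, and all function names are assumptions chosen for illustration, not values from the speech or from NASA.

```python
import math
import random


def sample_loss_prob(median_odds, error_factor, rng):
    """Draw one per-flight loss probability (hypothetical lognormal model).

    The median is 1/median_odds; the 95th percentile is assumed to be
    error_factor times the median.
    """
    sigma = math.log(error_factor) / 1.645  # 1.645 = z-score of the 95th percentile
    mu = math.log(1.0 / median_odds)
    return min(1.0, rng.lognormvariate(mu, sigma))  # a probability cannot exceed 1


def compare(vehicle_a, vehicle_b, draws=100_000, seed=0):
    """Return (share of draws where A is safer, mean risk of A, mean risk of B)."""
    rng = random.Random(seed)
    a_safer = 0
    total_a = total_b = 0.0
    for _ in range(draws):
        pa = sample_loss_prob(*vehicle_a, rng)
        pb = sample_loss_prob(*vehicle_b, rng)
        a_safer += pa < pb
        total_a += pa
        total_b += pb
    return a_safer / draws, total_a / draws, total_b / draws


tight = (75, 1.5)    # 1-in-75 estimate, narrow uncertainty (assumed)
wide = (100, 10.0)   # 1-in-100 estimate, wide uncertainty (assumed)
share, mean_tight, mean_wide = compare(tight, wide)
print(f"1-in-75 vehicle safer in {share:.0%} of draws")
print(f"average risk: 1-in-{1 / mean_tight:.0f} vs. 1-in-{1 / mean_wide:.0f}")
```

With these made-up spreads, the 1-in-100 vehicle keeps the better median but ends up with the higher average risk, because its wide uncertainty leaves room for much worse outcomes. Different assumptions about the spread would give different answers, which is the point: the headline number alone does not settle the comparison.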

Why use the loss-of-crew statistic at all, then? Gerstenmaier, more than once in his speech, suggested it was the fault of policymakers who want to keep things simple—or, he argued, too simple. “Most of us here live and work in Washington DC, where people like things simple,” he said. “Perhaps because of this, some people also talk about these things as if they were simple.”

The loss-of-crew metric, he said, is good for measurements of relative risk—measuring one design versus another—but not for absolute risk. For now, though, it’s the best that is available, he said, and one that NASA is using for the commercial crew program, with a goal of 1-in-275 for those vehicles.

“I really don’t have a better method than to use this as an absolute measure of safety,” he said, acknowledging that a single figure is inadequate to capture all the complexities of those vehicles. “We just need to be careful when we discuss these numbers.”

Being careful also means paying closer attention to “close calls” with future vehicles, treating them as “gifts from god” to be used to improve vehicles, rather than ignoring them, as happened with the shuttle. “There will be close calls, and we must learn from them,” he said. It means, too, that vehicles can’t be considered “operational” after a set number of flights, another lesson from the shuttle era.

In a comparison of risks, Gerstenmaier said that, last year, more than 700 people died in bicycle accidents in the United States. “That’s quite a number for a relatively benign form of transportation,” he said. (Of course, orders of magnitude more people rode bicycles than flew into space last year as well.)

The message, he said, was that even relatively mature, safe modes of transportation still carry with them risks, risks that society has chosen to accept. “We need to do everything possible to prevent loss, but recognize that it might happen,” he said. “This is not a total failure of our design, or of our system, or of our processes.”

The benefits of spaceflight, he concluded, outweighed the risks. “We will work carefully and diligently to mitigate those risks, but a failure will inevitably occur at some point,” he said. “We cannot let that shake our resolve.”